Generative AI tools are becoming increasingly common, promising to boost productivity by generating content and synthesizing information. However, their rise also brings a greater risk of organizational data leaks. Many AI tools run as web clients that can be used without signing in, so it is crucial for employees to use only services their company has approved, especially when handling sensitive corporate data. Sharing such information with unapproved apps can lead to unintended consequences.

When I mention “unapproved applications,” I’m not implying these tools are unsafe. The term refers to any app the organization hasn’t approved for official use, including apps from well-known vendors. A company might restrict access to widely trusted tools for several reasons: industry-specific data privacy regulations, GDPR compliance, or simply wanting tighter control over which apps employees can use.

This article highlights steps to secure Microsoft 365 data from unapproved generative AI apps. Some solutions might require premium or add-on licenses.

Preventing End Users from Granting Consent to Third-Party Apps

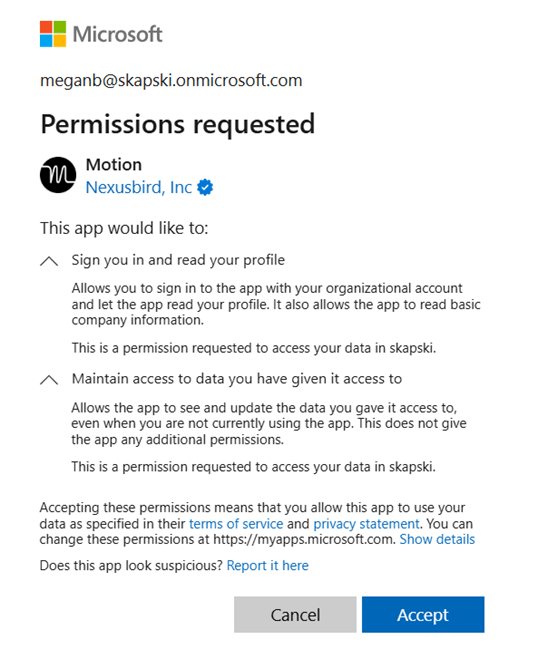

Unless an organization has Security Defaults enabled, users can often consent to apps accessing company data on their behalf. This means that even non-admin users can give third-party AI tools permission to access their data. Figure 1 illustrates the experience for end users when granting consent to a third-party app in Microsoft Entra.

If Security Defaults aren’t enabled for your organization, consider turning them on. Alternatively, restrict users’ ability to create app registrations and to consent to applications. A separate article looks at Security Defaults and whether they’re the right choice for your organization.
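
For tenants that manage these settings programmatically, the user-consent and app-registration switches live in the tenant’s authorization policy in Microsoft Graph. The Python sketch below only builds the request and does not send it. It makes two assumptions: that you already hold an access token with the Policy.ReadWrite.Authorization permission, and that you have read the current `permissionGrantPoliciesAssigned` list. Removing the `managePermissionGrantsForSelf.*` entries is what turns off user consent. The helper names are hypothetical, so check the Graph authorizationPolicy reference before sending anything.

```python
import json
import urllib.request

# Microsoft Graph endpoint for the tenant-wide authorization policy.
GRAPH_URL = "https://graph.microsoft.com/v1.0/policies/authorizationPolicy"


def build_consent_lockdown_body(current_policies, block_app_registration=True):
    """Hypothetical helper: build a PATCH body that disables user consent.

    Entries prefixed with managePermissionGrantsForSelf. let users consent
    to apps on their own behalf, so they are removed (case-insensitively).
    Other entries, such as owner consent for owned resources, are kept.
    """
    remaining = [
        policy for policy in current_policies
        if not policy.lower().startswith("managepermissiongrantsforself.")
    ]
    permissions = {"permissionGrantPoliciesAssigned": remaining}
    if block_app_registration:
        # Stop non-admin users from creating app registrations.
        permissions["allowedToCreateApps"] = False
    return {"defaultUserRolePermissions": permissions}


def build_patch_request(body, access_token):
    """Build, but do not send, the PATCH request for Microsoft Graph."""
    return urllib.request.Request(
        GRAPH_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
        method="PATCH",
    )


if __name__ == "__main__":
    current = [
        "ManagePermissionGrantsForSelf.microsoft-user-default-legacy",
        "ManagePermissionGrantsForOwnedResource.microsoft-dynamically-managed-permissions-for-team",
    ]
    print(json.dumps(build_consent_lockdown_body(current), indent=2))
```

The body is built from the current list instead of being hard-coded to an empty array. This leaves any non-consent entries in place.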

Managing Endpoints and Cloud Apps to Prevent Data Leaks in Generative AI Tools

Microsoft 365 administrators should consider managing devices with Microsoft Intune. They should also consider Defender for Endpoint, Defender for Cloud Apps, and Endpoint DLP to monitor or block unapproved activity in generative AI applications. Devices enrolled in Intune integrate with these solutions from a single management hub. From there, admins can manage the devices, onboard them into Endpoint DLP, and enable ongoing reporting in Defender for Cloud Apps, without extra components such as a log collector or Secure Web Gateway.

Auditing or Blocking Unapproved Generative AI Applications

Microsoft Defender for Endpoint and Defender for Cloud Apps work together to audit or block access to generative AI tools. They do this even when devices aren’t connected to a corporate network, VPN, or jump box that filters traffic.

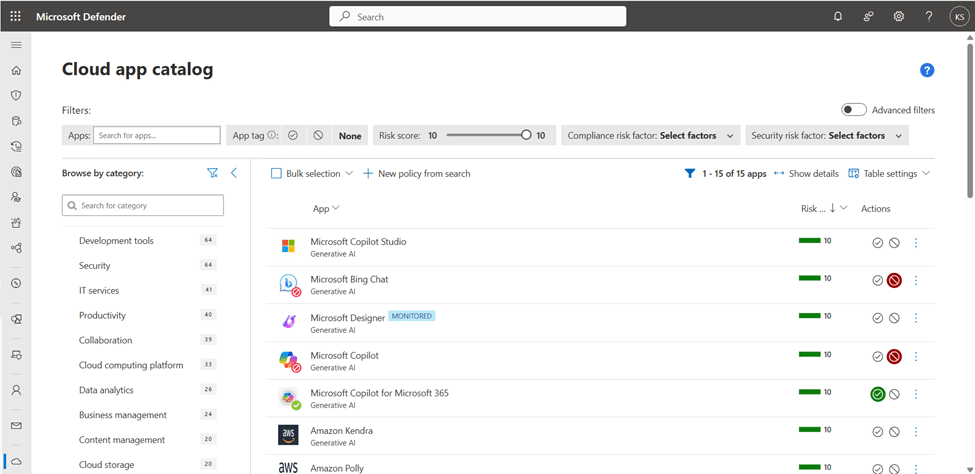

Figure 2 illustrates the Cloud App Catalog in Defender for Cloud Apps, which includes over 31,000 cloud apps and assesses their enterprise risk based on factors like regulatory certifications, industry standards, and Microsoft’s best practices. For instance, apps can be flagged for risks like a recent data breach, lacking a trusted certificate, or missing HTTP security headers. Organizations can also customize risk score metrics based on their specific needs. The apps can be filtered by categories, such as Generative AI.

Organizations might block or monitor apps for reasons unrelated to their risk score. In the example shown, Microsoft Designer is monitored (perhaps to track its usage), while Bing Chat and Microsoft Copilot are blocked. Both tools fall under the “Copilot” branding, but they are accessed via different URLs, so each has to be blocked separately. GDPR compliance is one real-world reason to block Bing Chat and Microsoft Copilot: Bing Chat doesn’t keep data within the EU Data Boundary, whereas Copilot for Microsoft 365 does.

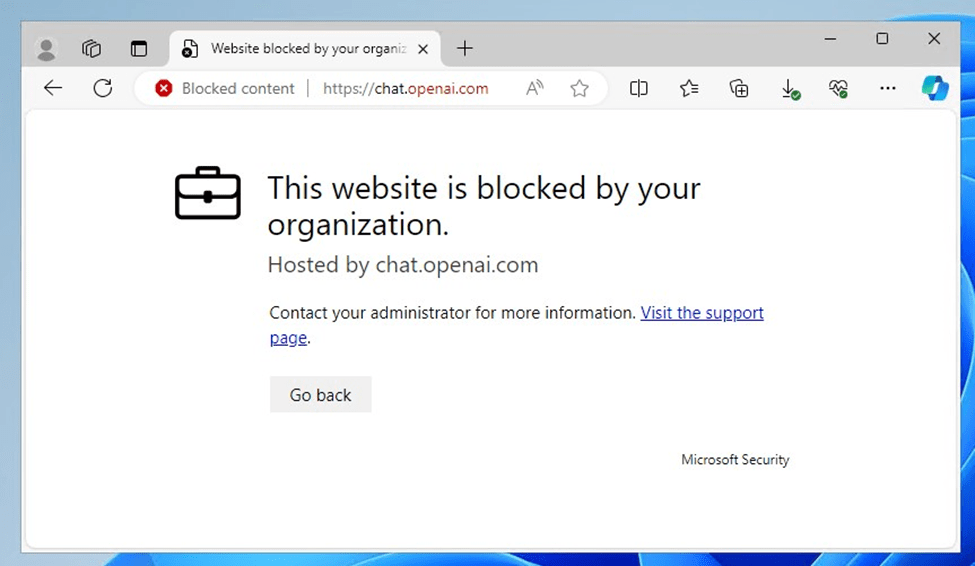

In the Cloud App Catalog, administrators can tag applications as monitored, sanctioned (approved), or unsanctioned (blocked). Figure 2 shows these tags. When an application is tagged as unsanctioned, users see the experience shown in Figure 3.

With new cloud apps constantly being released, it might feel like trying to block unauthorized apps is a never-ending game of “Whac-a-Mole,” where as soon as you deal with one, another appears. Fortunately, you don’t have to manually tag each app.

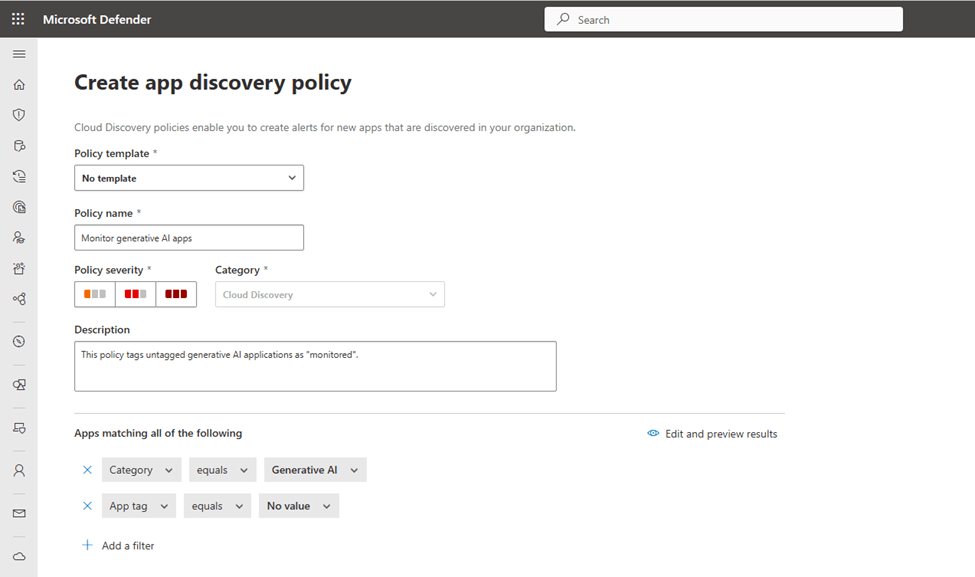

To set up an automatic app discovery policy for generative AI tools, go to Defender for Cloud Apps > Policy Management (under “Policies”) and select Create. In the policy settings, under “Apps matching all of the following,” choose “Category equals Generative AI” and “App tag equals No value” (see Figure 4). This will help automate the process of identifying and tagging generative AI apps.
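
The two conditions in Figure 4 must both be true, so an app qualifies only if it is in the Generative AI category and nobody has tagged it yet. The Python sketch below models that matching rule locally. The `DiscoveredApp` class, the function name, and the app names are hypothetical and are not part of the Defender for Cloud Apps API.

```python
from dataclasses import dataclass, field


@dataclass
class DiscoveredApp:
    """Hypothetical local model of an app listed by Cloud Discovery."""
    name: str
    category: str
    tags: set = field(default_factory=set)  # empty set models "No value"


def matches_genai_discovery_policy(app: DiscoveredApp) -> bool:
    """Category equals Generative AI AND App tag equals No value."""
    return app.category == "Generative AI" and not app.tags


apps = [
    DiscoveredApp("ExampleChat", "Generative AI"),
    DiscoveredApp("ApprovedAssistant", "Generative AI", {"sanctioned"}),
    DiscoveredApp("ExampleCRM", "CRM"),
]
matches = [app.name for app in apps if matches_genai_discovery_policy(app)]
print(matches)  # ['ExampleChat']
```

The empty-tag condition keeps the policy from overriding apps an administrator has already reviewed and tagged by hand.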

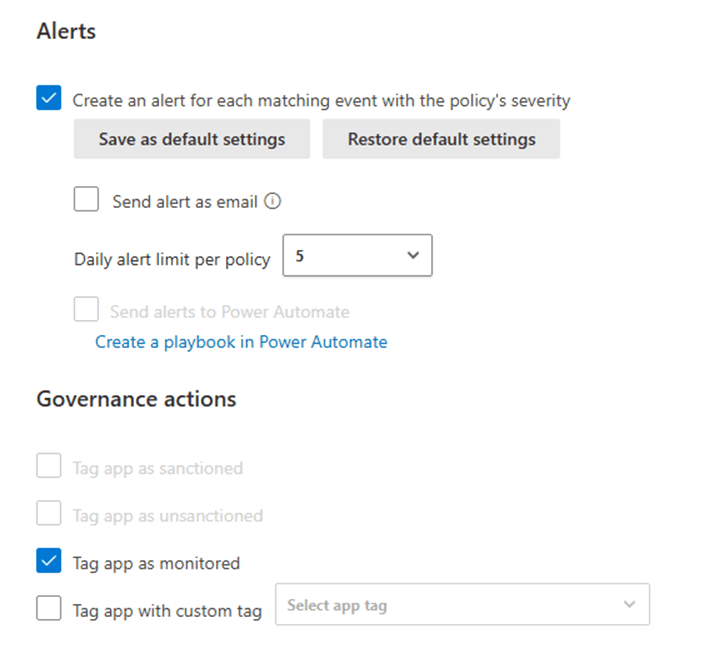

As shown in Figure 5, administrators can create alerts for events that match the policy, based on its severity, and cap the number of alerts per day (between 5 and 1,000). Alerts for newly discovered generative AI applications can be emailed directly to administrators. They can also be passed to Power Automate to trigger a workflow, such as creating a ticket in an IT ticketing system.

Under “Governance actions,” you can choose to “Tag app as monitored” or “Tag app as unsanctioned,” depending on whether you want to automatically monitor or block generative AI apps that haven’t been manually tagged.
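
Taken together, the governance action and the daily alert limit behave roughly like the Python model below. It is a hypothetical sketch, not the service’s implementation, and the function name is invented. It also assumes the governance action still applies to every matching app once the alert limit is reached, with only the alerts capped. Confirm that behavior in your tenant before relying on it.

```python
from dataclasses import dataclass, field

# The two governance actions described above.
GOVERNANCE_TAGS = {"monitored", "unsanctioned"}


@dataclass
class DiscoveredApp:
    """Hypothetical local model of a discovered app."""
    name: str
    category: str
    tags: set = field(default_factory=set)


def apply_genai_policy(apps, governance_tag="unsanctioned", daily_alert_limit=5):
    """Tag untagged generative AI apps; return at most daily_alert_limit alerts."""
    if governance_tag not in GOVERNANCE_TAGS:
        raise ValueError(f"unknown governance tag: {governance_tag}")
    if not 5 <= daily_alert_limit <= 1000:
        raise ValueError("daily alert limit must be between 5 and 1000")

    alerts = []
    for app in apps:
        if app.category == "Generative AI" and not app.tags:
            app.tags.add(governance_tag)  # governance action (assumed uncapped)
            if len(alerts) < daily_alert_limit:  # models the daily alert cap
                alerts.append(f"{app.name} tagged as {governance_tag}")
    return alerts


if __name__ == "__main__":
    apps = [DiscoveredApp(f"GenAIApp{i}", "Generative AI") for i in range(7)]
    alerts = apply_genai_policy(apps, "monitored", daily_alert_limit=5)
    print(len(alerts))  # 5
    print(all("monitored" in app.tags for app in apps))  # True
```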

When endpoints are onboarded to Defender for Endpoint, Cloud Discovery provides continuous reports. The Cloud Discovery dashboard highlights all apps discovered in the past 90 days. To review these apps, go to the Discovered Apps page. The interface is similar to the Cloud App Catalog, but the Generative AI category filter isn’t available there.

To export all data from the Discovered Apps page, select Export > Export data. If you just need a report listing the domains of newly discovered apps, choose Export > Export domains.
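
If you use the exported domains to feed another control, such as a DLP domain list or a firewall blocklist, you will usually need to clean the list first. The Python sketch below assumes the export is plain text with one domain per line; that file format is an assumption, not documented behavior. It lowercases entries, strips trailing dots, and removes blanks and duplicates.

```python
def load_exported_domains(text: str) -> list[str]:
    """Normalize an exported domain list (assumed: one domain per line)."""
    domains = set()
    for line in text.splitlines():
        domain = line.strip().lower().rstrip(".")
        if domain:  # skip blank lines
            domains.add(domain)
    return sorted(domains)


if __name__ == "__main__":
    sample = "chat.example.ai\nCHAT.example.ai\n\nwriter.example.com.\n"
    print(load_exported_domains(sample))  # ['chat.example.ai', 'writer.example.com']
    # For a real export (hypothetical file name):
    # with open("discovered-domains.txt", encoding="utf-8") as f:
    #     print(load_exported_domains(f.read()))
```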

Preventing Data Transfer to Unauthorized Apps

Without proper controls, employees can easily copy, paste, or upload company data to web applications. Blocking all copying and pasting isn’t practical, so it’s better to selectively block these actions for sensitive data.

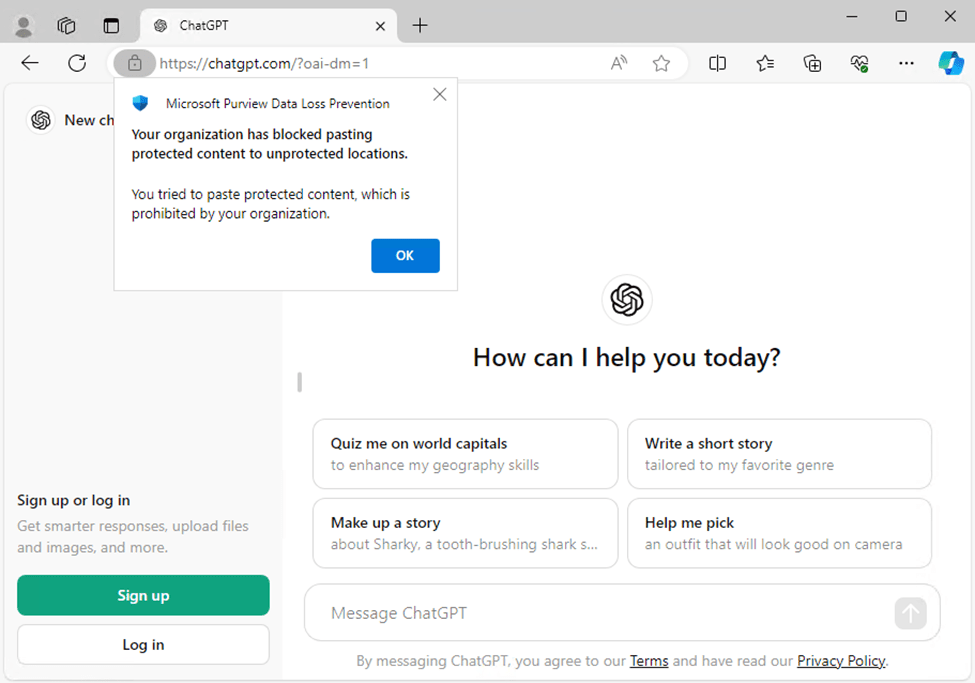

Microsoft Purview DLP, paired with Defender for Endpoint, can audit and prevent the transfer of organizational data to unauthorized locations (e.g., websites, desktop apps, removable storage) without needing extra software on the device. Purview DLP can also limit data sharing with unapproved cloud apps and services, like unauthorized generative AI tools (as shown in Figure 6).
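
Conceptually, selective blocking evaluates two things for each paste or upload: whether the content contains sensitive information, and whether the destination is an approved service. The Python sketch below models that decision. The function, the `DlpAction` values, and the example domains are hypothetical. Real Purview DLP policies are configured in the Purview portal, not in code.

```python
from enum import Enum


class DlpAction(Enum):
    ALLOW = "allow"
    AUDIT = "audit"
    BLOCK = "block"


def domain_matches(host: str, domain: str) -> bool:
    """True if host is the domain itself or one of its subdomains."""
    host, domain = host.lower(), domain.lower()
    return host == domain or host.endswith("." + domain)


def evaluate_transfer(contains_sensitive_info: bool, destination_host: str,
                      approved_domains: list[str], enforce: bool = True) -> DlpAction:
    """Decide what happens when data is pasted or uploaded to a website."""
    if not contains_sensitive_info:
        return DlpAction.ALLOW  # ordinary data is never interrupted
    if any(domain_matches(destination_host, d) for d in approved_domains):
        return DlpAction.ALLOW  # sensitive data, but an approved destination
    return DlpAction.BLOCK if enforce else DlpAction.AUDIT


if __name__ == "__main__":
    approved = ["approved-ai.example.com"]
    print(evaluate_transfer(True, "chat.unapproved.example", approved))      # DlpAction.BLOCK
    print(evaluate_transfer(True, "app.approved-ai.example.com", approved))  # DlpAction.ALLOW
    print(evaluate_transfer(False, "chat.unapproved.example", approved))     # DlpAction.ALLOW
```

The `enforce` flag mirrors the choice between auditing first and blocking outright. Copying and pasting ordinary data is never interrupted.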

Microsoft Edge supports Purview DLP natively, but Chrome and Firefox require the Microsoft Purview extension. You can deploy the extension through Intune so these browsers are covered. It’s also a good idea to block unsupported browsers such as Brave or Opera, which could otherwise be used to bypass DLP policies.

To install the Purview extension for Chrome or Firefox on Intune-managed Windows devices, you’ll need to create configuration profiles in Intune. Detailed instructions are available in Microsoft’s documentation for both Chrome and Firefox.
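
These profiles ultimately set standard browser policies. In Chrome, that is `ExtensionInstallForcelist`, whose entries take the form `<extension ID>;<update URL>`. In Firefox, it is `ExtensionSettings`, which maps an add-on ID to an installation mode and install URL. The Python sketch below builds both values. The extension IDs and the Firefox install URL are placeholders, not the real Purview extension identifiers, so take those from Microsoft’s documentation.

```python
import json

# Standard update URL for extensions hosted in the Chrome Web Store.
CHROME_WEB_STORE_UPDATE_URL = "https://clients2.google.com/service/update2/crx"

# Placeholders only: replace with the values from Microsoft's documentation.
PURVIEW_CHROME_EXTENSION_ID = "<purview-chrome-extension-id>"
PURVIEW_FIREFOX_ADDON_ID = "<purview-firefox-addon-id>"
PURVIEW_FIREFOX_INSTALL_URL = "<purview-firefox-install-url>"


def chrome_forcelist_entry(extension_id: str,
                           update_url: str = CHROME_WEB_STORE_UPDATE_URL) -> str:
    """One ExtensionInstallForcelist entry: '<extension ID>;<update URL>'."""
    return f"{extension_id};{update_url}"


def firefox_extension_settings(addon_id: str, install_url: str) -> str:
    """JSON for Firefox's ExtensionSettings policy that force-installs one add-on."""
    settings = {
        addon_id: {
            "installation_mode": "force_installed",
            "install_url": install_url,
        }
    }
    return json.dumps(settings, indent=2)


if __name__ == "__main__":
    print(chrome_forcelist_entry(PURVIEW_CHROME_EXTENSION_ID))
    print(firefox_extension_settings(PURVIEW_FIREFOX_ADDON_ID,
                                     PURVIEW_FIREFOX_INSTALL_URL))
```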

Blocking unsupported browsers from reaching corporate resources (for example, with Conditional Access policies in Entra ID) isn’t enough to fully secure your data. Without the Purview extension, employees can still paste sensitive information into web apps opened in those browsers. It’s better to prevent unsupported browsers from running at all, which can be done on modern Windows devices using Windows Defender Application Control.

Another option for securing cloud data is Defender for Cloud Apps Conditional Access App Control. This solution lets you create session policies that block copying and pasting in supported applications. However, I personally believe Endpoint DLP offers stronger protection. While Defender for Cloud Apps focuses on governing data interactions within cloud applications, Endpoint DLP provides device-level security, ensuring sensitive data is protected regardless of the app being used.

Control Copying Corporate Data to Personal Cloud Services or Removable Storage

To prevent employees from copying corporate data to personal cloud services or removable storage devices, administrators can set up App Protection Policies in Intune. These policies help control how users share and save data.
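
As a rough illustration, several of these settings map to properties on Intune app protection policies in Microsoft Graph: blocking “Save as,” limiting where copies can be stored, and restricting data transfer and copy and paste. The Python sketch below builds a request body for an Android policy. The property names and enum values reflect the Graph `androidManagedAppProtection` resource as best understood here and should be verified against the current reference. The helper name and display name are hypothetical.

```python
import json

# Graph collection for Android app protection policies (verify before use).
ANDROID_APP_PROTECTION_URL = (
    "https://graph.microsoft.com/v1.0/deviceAppManagement/androidManagedAppProtections"
)


def build_app_protection_body(display_name: str) -> dict:
    """Hypothetical helper: keep org data inside managed apps and corporate storage."""
    return {
        "@odata.type": "#microsoft.graph.androidManagedAppProtection",
        "displayName": display_name,
        # Block "Save as" to arbitrary locations...
        "saveAsBlocked": True,
        # ...and allow saving copies only to corporate storage.
        "allowedDataStorageLocations": ["oneDriveForBusiness", "sharePoint"],
        # Org data may only be sent to other policy-managed apps.
        "allowedOutboundDataTransferDestinations": "managedApps",
        # Copy out only to managed apps; pasting in from other apps is allowed.
        "allowedOutboundClipboardSharingLevel": "managedAppsWithPasteIn",
    }


if __name__ == "__main__":
    body = build_app_protection_body("Block personal storage (example)")
    print(json.dumps(body, indent=2))
```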

Endpoint DLP goes a step further by monitoring and controlling data activity across devices, detecting and blocking unauthorized attempts to transfer or copy sensitive information. App Protection Policies focus mainly on securing data within mobile apps, whereas Endpoint DLP provides broader control over sensitive data movement across all endpoints.

Prevent Screenshots of Sensitive Data

You can block screenshots on mobile devices by setting up device configuration profiles in Intune. However, Microsoft doesn’t offer an ideal solution for preventing screenshots on desktops, and there’s no way to block people from taking photos of screens with their phones.

To block screen captures on desktop operating systems, administrators can use Purview Information Protection sensitivity labels. These labels allow you to encrypt documents, sites, and emails either manually or based on detected sensitive content. In the sensitivity label’s encryption settings, administrators can configure usage rights. By removing the Copy (or “EXTRACT”) usage right, screenshots of labeled content can be blocked.

I recommend using this approach carefully if your organization uses Copilot for Microsoft 365 with sensitive documents. Without the View and Copy usage rights, Copilot’s functionality may be limited.
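
The interaction between these usage rights can be modeled as simple set checks. In the Python sketch below, the right names (VIEW, EDIT, PRINT, EXTRACT, OWNER) are the common names used by Microsoft’s encryption service, and OWNER (Full Control) implies every other right. The helper functions are hypothetical and only mirror the two rules described above.

```python
# Common usage-right names; OWNER (Full Control) implies all other rights.
FULL_CONTROL = "OWNER"
COPILOT_REQUIRED_RIGHTS = frozenset({"VIEW", "EXTRACT"})


def _has(rights: set, right: str) -> bool:
    """True if the right is granted directly or through Full Control."""
    return FULL_CONTROL in rights or right in rights


def screen_capture_allowed(rights: set) -> bool:
    """Screenshots of labeled content are blocked without EXTRACT (Copy)."""
    return _has(rights, "EXTRACT")


def copilot_can_use(rights: set) -> bool:
    """Copilot needs both VIEW and EXTRACT to work with the content."""
    return all(_has(rights, r) for r in COPILOT_REQUIRED_RIGHTS)


if __name__ == "__main__":
    reviewer = {"VIEW", "EDIT", "PRINT"}  # Copy removed from the label
    editor = {"VIEW", "EDIT", "EXTRACT"}
    print(screen_capture_allowed(reviewer), copilot_can_use(reviewer))  # False False
    print(screen_capture_allowed(editor), copilot_can_use(editor))      # True True
```

Removing EXTRACT to stop screenshots therefore also stops Copilot from using that content for the affected users.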

Still, it’s important to remember that users can always bypass security controls by simply photographing their screens if they really want to.

Safeguarding Corporate Data in the Age of AI

With the rise of generative AI, it’s more crucial than ever to use information security tools to protect corporate data. Microsoft 365 administrators and organizations must implement policies that best suit their needs. However, be mindful not to make security so restrictive that it hinders productivity.